Springboard Tutorial: Intro to AWS was originally published on Springboard.

When will demand for a certain product spike during the holiday season? Can we detect fraudulent ordering patterns? How do we reduce wait time in the call center?

These are all questions that machine learning (ML) engineers can answer with Amazon Web Services (AWS), a comprehensive cloud computing platform whose ML services can generate accurate, scalable predictions from data stored in Amazon S3, Amazon Redshift, or Amazon Relational Database Service (RDS) for MySQL.

Want to learn more about becoming a machine learning engineer? Check out Springboard’s comprehensive career guide here.

Getting started with AWS

Amazon Machine Learning (Amazon ML) helps you create and train models through easy-to-learn APIs. When building an ML model, engineers usually follow a few standard steps: collect and transform the data, split it into training and validation sets, choose a suitable model, then train the model and evaluate its results.
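To make the split step concrete, here is a minimal plain-Python sketch. It is not Amazon ML code; the 70/30 ratio, the fixed seed, and the name `train_validation_split` are illustrative assumptions.

```python
import random


def train_validation_split(records, train_fraction=0.7, seed=42):
    """Shuffle records, then split them into training and validation lists.

    The 70/30 default and the seed are illustrative choices, not AWS defaults.
    """
    if not 0.0 < train_fraction < 1.0:
        raise ValueError("train_fraction must be strictly between 0 and 1")
    shuffled = list(records)               # copy so the caller's list is untouched
    random.Random(seed).shuffle(shuffled)  # seeded RNG gives a reproducible split
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]


rows = [{"distance": d, "cost": 2 * d} for d in range(10)]
train, validation = train_validation_split(rows)
print(len(train), len(validation))
```

Shuffling before splitting matters when the source file is sorted (for example, by date or by label); otherwise the validation set may not look like the data the model was trained on.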

Here are some quick steps from Knowledge Hut to begin using AWS Machine Learning:

- Sign in to AWS and select “Machine Learning.” Launch with Standard Setup.

- Choose and format a data source. Data sources do not actually store the data, but they provide a reference to the Amazon S3 location holding the input data.

- Create a machine learning model. In “Training and Evaluation” settings, choose the default mode to use Amazon ML’s recommended recipe, training parameters, and evaluation settings.

- Build a prediction. Under “ML Model Report,” choose “Try real-time predictions” to quickly generate prediction results.
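The same console steps can also be scripted. The sketch below assumes the boto3 `machinelearning` client for the legacy Amazon ML service and an account that can still use it; the data source and model IDs are hypothetical, and the client is passed in so the flow can be exercised without AWS credentials.

```python
def run_amazon_ml_quickstart(ml_client, data_s3_uri, schema_s3_uri, model_type="BINARY"):
    """Script the console steps: data source -> model -> real-time endpoint.

    ml_client is assumed to be boto3.client("machinelearning");
    "ds-quickstart" and "ml-quickstart" are hypothetical IDs.
    """
    ds_id, model_id = "ds-quickstart", "ml-quickstart"

    # A data source only references the S3 data; nothing is copied.
    ml_client.create_data_source_from_s3(
        DataSourceId=ds_id,
        DataSourceName="Quickstart training data",
        DataSpec={"DataLocationS3": data_s3_uri, "DataSchemaLocationS3": schema_s3_uri},
        ComputeStatistics=True,  # required if the data source will be used for training
    )

    # Train with the default recipe and training parameters (none passed explicitly).
    ml_client.create_ml_model(
        MLModelId=model_id,
        MLModelName="Quickstart model",
        MLModelType=model_type,  # "BINARY", "MULTICLASS", or "REGRESSION"
        TrainingDataSourceId=ds_id,
    )

    # Real-time predictions are served from a dedicated endpoint.
    endpoint = ml_client.create_realtime_endpoint(MLModelId=model_id)
    return model_id, endpoint["RealtimeEndpointInfo"]["EndpointUrl"]
```

These calls are asynchronous, so a real script would wait for training to finish (for example, by polling `get_ml_model` until its `Status` is `COMPLETED`) before requesting predictions.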

AWS tools and services

AWS Machine Learning includes foundational tools like SageMaker, frameworks (including TensorFlow, PyTorch, and Apache MXNet), infrastructure (EC2, Elastic Inference, and AWS Inferentia), and learning tools (AWS DeepLens, DeepComposer, and DeepRacer).

Here are some top services and tools to round out your skillset and apply your learnings in real-life settings:

Amazon SageMaker Studio is a state-of-the-art platform that lets you wrangle, label, and process data; build your own models in Jupyter notebooks; refine them through training and tuning; and deploy them to the cloud in a single click. This fully integrated development environment (IDE) can also detect bias, and it supports security features such as encryption, authorization, and authentication. This Medium post provides a deep dive into SageMaker and its pros and cons.
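Once a model is deployed, applications typically call it through the SageMaker runtime API. A minimal sketch, assuming a boto3 `sagemaker-runtime` client, an already-deployed endpoint, and a model container that accepts CSV input (all assumptions, not details from this article):

```python
def predict_csv(runtime_client, endpoint_name, features):
    """Send one CSV row to a deployed SageMaker endpoint and return the reply text.

    runtime_client is assumed to be boto3.client("sagemaker-runtime");
    endpoint_name is a placeholder for an endpoint you have already deployed.
    """
    body = ",".join(str(value) for value in features)  # e.g. [5.1, 3.5] -> "5.1,3.5"
    response = runtime_client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="text/csv",  # assumes the model container accepts CSV input
        Body=body,
    )
    # "Body" is a streaming object whose read() returns bytes.
    return response["Body"].read().decode("utf-8")
```

For example, `predict_csv(boto3.client("sagemaker-runtime"), "my-endpoint", [5.1, 3.5, 1.4, 0.2])`, where `my-endpoint` is a hypothetical endpoint name.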

Amazon Lex provides automatic speech recognition (ASR) and natural language understanding, which help you add conversational interfaces to applications. You can use a Lex chatbot to add automated options to a call center or mobile app, so customers don’t need to wait for a human agent to change their password or cancel a flight.

Amazon Textract extracts text and data from scanned documents and works with everything from handwriting to PDFs, forms, and tables. Features include optical character recognition (OCR) and an optional built-in human review workflow that sends a random sample of documents to people for manual review.

Amazon Comprehend uses natural language processing (NLP) to identify references to a given topic across a collection of text files, which are often stored in an Amazon S3 data lake. Comprehend can synthesize customer feedback from product reviews, recommend news content to readers based on their reading history, classify customer support tickets for more efficient processing, and recruit participants for the right medical trials based on cohort analysis.
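As a sketch of the customer-feedback use case, the function below tallies the sentiment Comprehend assigns to a list of product reviews. It assumes a boto3 `comprehend` client and English-language reviews; the function name and the `ERROR` bucket are illustrative choices.

```python
from collections import Counter

BATCH_LIMIT = 25  # BatchDetectSentiment accepts at most 25 documents per call


def summarize_review_sentiment(comprehend_client, reviews, language="en"):
    """Tally Comprehend sentiment labels (POSITIVE, NEGATIVE, NEUTRAL, MIXED).

    comprehend_client is assumed to be boto3.client("comprehend").
    """
    counts = Counter()
    for start in range(0, len(reviews), BATCH_LIMIT):
        batch = reviews[start:start + BATCH_LIMIT]
        response = comprehend_client.batch_detect_sentiment(
            TextList=batch, LanguageCode=language
        )
        for result in response["ResultList"]:
            counts[result["Sentiment"]] += 1
        # Documents Comprehend could not process are reported separately.
        failed = len(response.get("ErrorList", []))
        if failed:
            counts["ERROR"] += failed
    return counts
```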

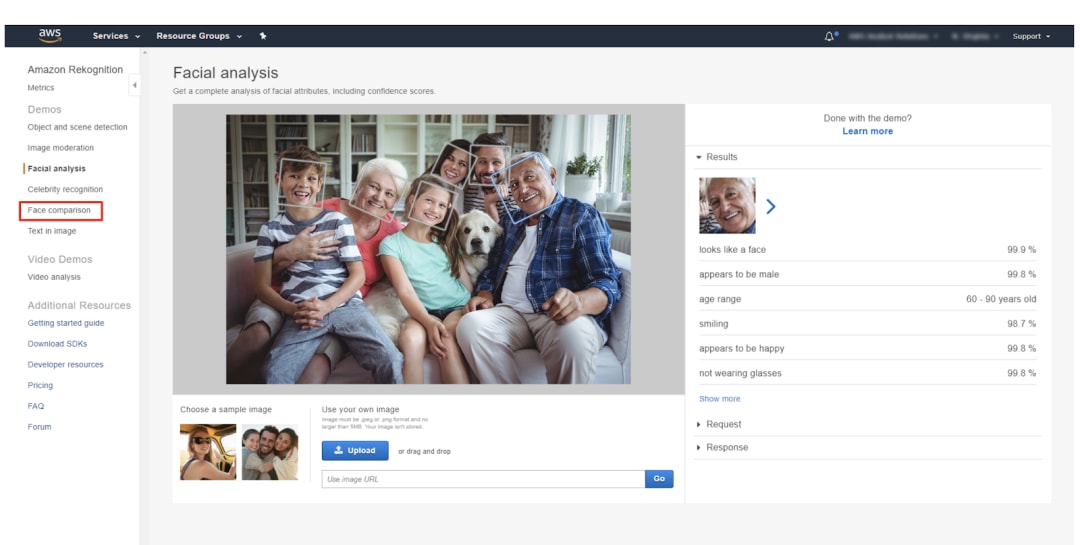

Amazon Rekognition detects objects and faces in images and uses neural network models to label items for visual search. With Custom Labels, you can supply images of objects or scenes specific to your business, and Amazon Rekognition will build the appropriate models to accomplish defined tasks, like identifying household items in pictures, finding a product logo on a store shelf, flagging inappropriate content for families with children, deciphering human emotion from facial expressions, interpreting hard-to-read text (billboards or handwritten signs), and recognizing celebrities or brand influencers.

AWS Inferentia is a machine learning inference chip designed to deliver high throughput and low latency, making it easier to integrate ML into applications.

Amazon Translate is a neural machine translation service that uses NLP to convert text from one language to another. Ranked as the top machine translation provider of 2020, Amazon Translate can help you build websites that automatically appear in each user’s native language, understand brand sentiment through social media analytics, and scale customer support by translating helpdesk queries into a common language.
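A minimal sketch of calling Translate from Python, assuming a boto3 `translate` client; `translate_lines` is a hypothetical helper that translates a script one line at a time (English to French by default).

```python
def translate_lines(translate_client, lines, source="en", target="fr"):
    """Translate a script line by line, e.g. from English to French.

    translate_client is assumed to be boto3.client("translate").
    """
    translated = []
    for line in lines:
        if not line.strip():  # keep blank lines without calling the API
            translated.append(line)
            continue
        response = translate_client.translate_text(
            Text=line, SourceLanguageCode=source, TargetLanguageCode=target
        )
        translated.append(response["TranslatedText"])
    return translated
```

Skipping blank lines saves API calls and keeps the translated script aligned line for line with the original.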

Amazon Elastic Inference works with SageMaker, EC2, and ECS, letting you attach low-cost, GPU-powered acceleration to reduce inference costs. It supports TensorFlow, Apache MXNet, PyTorch, and ONNX models.

AWS DeepLens is a deep learning-enabled video camera that can analyze on-camera action and integrates with SageMaker for training models, Polly for speech, and Rekognition for image analysis. You can use it to recognize different types of daily activities or to detect head and facial movements.

AWS DeepComposer’s virtual keyboard can generate music from pre-programmed genres like country, pop, and jazz, which you can then share via SoundCloud.

AWS DeepRacer pairs a 3D racing simulator with a tiny autonomous race car and lets you compete in the AWS DeepRacer League, billed as the world’s first global autonomous racing league with real prizes. The car comes with cameras and sensors, creating an environment conducive to experimenting with reinforcement learning and neural network configurations.
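DeepRacer models learn from a Python reward function that the simulator calls at each step with a `params` dictionary. The sketch below follows the common "stay near the center line" pattern; the band widths and reward values are illustrative assumptions, and it relies only on the `all_wheels_on_track`, `track_width`, and `distance_from_center` input keys.

```python
def reward_function(params):
    """Reward the car for staying close to the center line of the track."""
    if not params["all_wheels_on_track"]:
        return 1e-3  # near-zero reward for leaving the track

    track_width = params["track_width"]
    distance_from_center = params["distance_from_center"]

    # Three bands of increasing distance from the center line (illustrative widths).
    if distance_from_center <= 0.1 * track_width:
        reward = 1.0
    elif distance_from_center <= 0.25 * track_width:
        reward = 0.5
    elif distance_from_center <= 0.5 * track_width:
        reward = 0.1
    else:
        reward = 1e-3  # likely about to leave the track
    return float(reward)
```

Returning a tiny positive value instead of zero for bad states is a common choice in reinforcement learning setups, so the signal never disappears entirely.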

Advantages of AWS for machine learning

There are many benefits to using AWS for machine learning. AWS is cost-effective and offers API integrations and support for TensorFlow, Caffe2, and Apache MXNet. You pay only for what you use: most organizations are billed an hourly rate for compute time plus a charge for the number of predictions generated. There’s also an AWS Free Tier, which provides access to various machine learning resources for a limited time (usually up to a year).
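Pay-as-you-go billing comes down to simple arithmetic: compute hours times an hourly rate, plus a per-prediction charge. The sketch below makes that explicit. The rates in the example are hypothetical placeholders, not AWS prices.

```python
def estimate_cost(compute_hours, hourly_rate, predictions, price_per_1000_predictions):
    """Pay-as-you-go estimate: compute time plus a per-prediction charge.

    All rates are inputs; the values in the example below are hypothetical,
    so substitute current prices for your service and region.
    """
    compute_cost = compute_hours * hourly_rate
    prediction_cost = predictions / 1000 * price_per_1000_predictions
    return round(compute_cost + prediction_cost, 2)


# Hypothetical rates, for illustration only.
monthly = estimate_cost(compute_hours=20, hourly_rate=0.50,
                        predictions=200_000, price_per_1000_predictions=0.10)
print(f"Estimated monthly cost: ${monthly:.2f}")
```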

Although Amazon ML is an easy choice for companies already in the AWS ecosystem because of its interoperability, it offers fewer features than competitors like Microsoft, and it targets software developers rather than corporate data scientists.

Here’s a solid overview of the pros and cons of AWS vs. Azure vs. Google Cloud Platform. AWS is widely regarded as one of the most reliable cloud storage options: S3 is designed for eleven nines of durability (99.999999999%), and EC2 offers a 99.99% uptime commitment in its service level agreement. Costs vary across the major cloud providers depending on server types and whether discounted pricing is used.
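To see what eleven nines means in practice, treat the durability figure as an annual, per-object loss probability (an interpretive assumption) and multiply by the number of objects stored:

```python
def expected_annual_losses(object_count, nines=11):
    """Expected objects lost per year, reading durability as a per-object annual figure.

    Eleven nines (99.999999999%) corresponds to a 1e-11 annual loss probability.
    """
    annual_loss_probability = 10.0 ** -nines
    return object_count * annual_loss_probability


losses = expected_annual_losses(10_000_000)
print(f"{losses:.4f} objects per year, about one every {1 / losses:,.0f} years")
```

With 10 million objects, the expected loss works out to about 0.0001 objects per year, or roughly one object every 10,000 years.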

Practice with these hands-on examples

Practice makes perfect, and there are many free resources to give you hands-on experience with AWS for ML. Here are some examples of problems you can solve!

- Test Amazon Machine Learning on a Human Activity Recognition (HAR) dataset

Convert the input data with Python, and use the boto3 library to generate online (real-time) predictions. The dataset contains 10,000 records from smartphone sensors, with activities labeled numerically (1 = walking, 2 = walking upstairs, 3 = walking downstairs, 4 = sitting, 5 = standing, 6 = lying down). The model’s evaluation matrix shows its F-score (an evaluation metric from 0 to 1 that combines precision and recall), and its diagonal shows how likely each activity is to be classified correctly.
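A minimal sketch of the online-prediction step, assuming a boto3 `machinelearning` client, a multiclass model that already has a real-time endpoint, and hypothetical feature names (`accel_x`, `accel_y`) standing in for the HAR sensor columns:

```python
ACTIVITY_NAMES = {
    "1": "walking", "2": "walking upstairs", "3": "walking downstairs",
    "4": "sitting", "5": "standing", "6": "lying down",
}


def to_record(feature_names, values):
    """Amazon ML's predict() takes the record as a map of attribute name -> string."""
    return {name: str(value) for name, value in zip(feature_names, values)}


def predict_activity(ml_client, model_id, endpoint_url, feature_names, values):
    """Request one real-time prediction and translate the numeric label.

    ml_client is assumed to be boto3.client("machinelearning"); model_id and
    endpoint_url come from a model that already has a real-time endpoint.
    """
    response = ml_client.predict(
        MLModelId=model_id,
        Record=to_record(feature_names, values),
        PredictEndpoint=endpoint_url,
    )
    label = response["Prediction"]["predictedLabel"]  # multiclass models return a label
    return ACTIVITY_NAMES.get(label, label)
```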

- Apply Amazon ML to predict how customers will respond to a marketing offer

You can analyze historical data on customers who bought products similar to the bank term deposit being offered, then build a model that targets the top 3% of customers most likely to take up the offer.
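Once the model scores each customer with a probability of accepting the offer, targeting the top 3% is a ranking problem. A plain-Python sketch, assuming you already have the scores; `top_percent` is a hypothetical helper:

```python
def top_percent(customer_ids, scores, percent=3):
    """Return the IDs of the top `percent`% of customers by predicted score.

    scores are assumed to be probabilities from a binary model
    (higher means more likely to accept the offer).
    """
    if len(customer_ids) != len(scores):
        raise ValueError("customer_ids and scores must have the same length")
    ranked = sorted(zip(customer_ids, scores), key=lambda pair: pair[1], reverse=True)
    k = max(1, -(-len(ranked) * percent // 100))  # integer ceiling, at least one customer
    return [customer for customer, _ in ranked[:k]]
```

Using integer ceiling division avoids floating-point surprises when computing how many customers make up 3% of the list.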

- Take a free deep-dive Coursera course on how to use Amazon SageMaker and Jupyter Notebooks

This also covers Amazon Comprehend, Translate, and DeepLens, with practice exercises.

- Create a custom bot for booking car reservations with Amazon Lex

You can train the chatbot on sample utterances like “Book a Car” or “Make a Reservation” that trigger the booking workflow, and specify different response cards.
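After the bot is built, you can test utterances from Python. This sketch assumes the Lex V2 runtime (the `lexv2-runtime` client in boto3) and hypothetical bot and alias IDs; a tutorial built on the older Lex V1 API would use different calls.

```python
def send_utterance(lex_client, bot_id, alias_id, session_id, text, locale="en_US"):
    """Send one user utterance to a Lex V2 bot; return (intent name, bot messages).

    lex_client is assumed to be boto3.client("lexv2-runtime"); bot_id and
    alias_id identify a bot you have already built (for example, one with a
    hypothetical "BookCar" intent).
    """
    response = lex_client.recognize_text(
        botId=bot_id,
        botAliasId=alias_id,
        localeId=locale,
        sessionId=session_id,  # reuse the same ID to keep a multi-turn conversation
        text=text,
    )
    intent = response.get("sessionState", {}).get("intent", {}).get("name")
    messages = [m["content"] for m in response.get("messages", []) if "content" in m]
    return intent, messages
```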

- Compare and analyze faces with Amazon Rekognition

You can upload a sample image and detect emotions like happy, confused, and calm, each with its own confidence score. You can also check whether the people in other images match a given reference image.
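Both tasks map onto Rekognition’s `detect_faces` and `compare_faces` operations. The sketch below assumes a boto3 `rekognition` client, images stored in S3, and a 90% similarity threshold; the bucket, keys, threshold, and helper names are illustrative assumptions.

```python
def top_emotion(face_detail):
    """Pick the highest-confidence emotion from one DetectFaces FaceDetail."""
    emotions = face_detail.get("Emotions", [])
    if not emotions:
        return None
    best = max(emotions, key=lambda emotion: emotion["Confidence"])
    return best["Type"], best["Confidence"]  # e.g. ("HAPPY", 97.2)


def analyze_faces(rekognition_client, bucket, reference_key, candidate_key, threshold=90.0):
    """Return the dominant emotion per face in the reference image, and whether
    any face in the candidate image matches the reference face.

    rekognition_client is assumed to be boto3.client("rekognition").
    """
    reference = {"S3Object": {"Bucket": bucket, "Name": reference_key}}
    candidate = {"S3Object": {"Bucket": bucket, "Name": candidate_key}}

    # Attributes=["ALL"] asks for emotions, among other facial attributes.
    faces = rekognition_client.detect_faces(Image=reference, Attributes=["ALL"])
    emotions = [top_emotion(face) for face in faces["FaceDetails"]]

    comparison = rekognition_client.compare_faces(
        SourceImage=reference, TargetImage=candidate, SimilarityThreshold=threshold
    )
    return emotions, len(comparison["FaceMatches"]) > 0
```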

- Extract text from a Base64 image, S3 bucket image, and S3 bucket document with Amazon Textract

Do this using AWS Lambda and Python. Eventually, this lets you generate a new .txt file from the text extracted from a given PDF.
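A minimal sketch of the extraction step, assuming a boto3 `textract` client and a hypothetical event format that carries either a Base64 image or an S3 bucket and key. `detect_document_text` is synchronous and suited to images and single-page documents; multi-page PDFs go through the asynchronous `start_document_text_detection` operation instead.

```python
import base64


def lines_from_blocks(blocks):
    """Keep only LINE blocks, in the order Textract returns them."""
    return [block["Text"] for block in blocks if block.get("BlockType") == "LINE"]


def extract_text(textract_client, event):
    """Run synchronous text detection on a Base64 image or an S3 object.

    The event shape ({"image_base64": ...} or {"bucket": ..., "key": ...})
    is a hypothetical convention for this sketch.
    """
    if "image_base64" in event:
        document = {"Bytes": base64.b64decode(event["image_base64"])}
    else:
        document = {"S3Object": {"Bucket": event["bucket"], "Name": event["key"]}}
    response = textract_client.detect_document_text(Document=document)
    return "\n".join(lines_from_blocks(response["Blocks"]))


def lambda_handler(event, context):
    """AWS Lambda entry point; boto3 ships with the Lambda Python runtime."""
    import boto3  # imported here so the helpers above can be tested without boto3

    return {"text": extract_text(boto3.client("textract"), event)}
```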

- Use the Amazon Translate API to translate a speech script from English to French

Create a new scene and a main entity. You can then change the “Script” to “Speech,” edit in Text Editor, and write code to associate the host voice ID with the right language code.

- Build a job entity recognizer with Amazon Comprehend

Use Named Entity Recognition (NER) to extract required skills, degrees, and majors from job descriptions. You can then annotate job descriptions with the UBIAI annotation tool and train a Comprehend custom entity recognizer, so that Comprehend can automatically extract skills, degrees, and majors from any job description you submit.

Glossary: key AWS concepts

Here is some key terminology to help ML engineers get more out of AWS tools.

Datasource: A datasource holds metadata about your input data rather than the data itself: where the data lives and which attributes (unique, named properties) it contains, such as cost, distance, color, and size. These attributes usually correspond to the column headings of a CSV file or spreadsheet. Amazon ML uses this information to build models that find patterns in the data.
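Amazon ML reads a datasource’s attributes from a JSON schema file stored alongside the CSV data. A sketch that builds one from a CSV header, assuming the column types are supplied by hand; `build_schema` is a hypothetical helper, and defaulting unlisted columns to `CATEGORICAL` is an assumption:

```python
import json

VALID_TYPES = {"BINARY", "CATEGORICAL", "NUMERIC", "TEXT"}


def build_schema(header, target, types, has_header=True):
    """Describe a CSV file's attributes in the JSON schema format Amazon ML reads.

    header: column names from the CSV; types: name -> attribute type.
    Columns missing from `types` default to CATEGORICAL (an assumption).
    """
    if target not in header:
        raise ValueError(f"target {target!r} is not a column in the header")
    attributes = []
    for name in header:
        attribute_type = types.get(name, "CATEGORICAL")
        if attribute_type not in VALID_TYPES:
            raise ValueError(f"unknown attribute type {attribute_type!r} for {name!r}")
        attributes.append({"attributeName": name, "attributeType": attribute_type})
    schema = {
        "version": "1.0",
        "targetAttributeName": target,
        "dataFormat": "CSV",
        "dataFileContainsHeader": has_header,
        "attributes": attributes,
    }
    return json.dumps(schema, indent=2)


print(build_schema(["cost", "distance", "color", "size"], target="cost",
                   types={"cost": "NUMERIC", "distance": "NUMERIC"}))
```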

Models: Amazon ML can build three types of models:

- A binary classification model, which predicts one of two outcomes (yes or no)

- A multiclass classification model, which predicts one of several categories (purple, green, red, blue, or yellow)

- A regression model, which predicts a numeric value (e.g., how many burgers will the average customer order at a restaurant on a single visit?)

You will also need to decide on the maximum model size (more patterns mean a larger model), the number of passes (how many times Amazon ML iterates over the training data records), and the regularization amount (which controls model complexity to avoid overfitting).
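In the Amazon ML API, these choices are passed to `create_ml_model` as a model type plus a `Parameters` map of `sgd.*` settings. A sketch, assuming you pick one of L1 or L2 regularization rather than both; `training_settings` is a hypothetical helper, and the example values are illustrative rather than service defaults.

```python
MODEL_TYPES = {"binary": "BINARY", "multiclass": "MULTICLASS", "regression": "REGRESSION"}


def training_settings(kind, max_model_bytes, max_passes, l2_amount=None, l1_amount=None):
    """Translate size / passes / regularization choices into Amazon ML settings.

    Returns (MLModelType, Parameters) for create_ml_model. Amazon ML expects
    the parameter values as strings.
    """
    if l1_amount is not None and l2_amount is not None:
        raise ValueError("choose L1 or L2 regularization, not both")
    parameters = {
        "sgd.maxMLModelSizeInBytes": str(max_model_bytes),  # bigger cap allows more patterns
        "sgd.maxPasses": str(max_passes),                   # passes over the training data
        "sgd.shuffleType": "auto",                          # let Amazon ML shuffle the data
    }
    if l2_amount is not None:
        parameters["sgd.l2RegularizationAmount"] = f"{l2_amount:.1E}"
    if l1_amount is not None:
        parameters["sgd.l1RegularizationAmount"] = f"{l1_amount:.1E}"
    return MODEL_TYPES[kind], parameters


model_type, params = training_settings("multiclass", max_model_bytes=33_554_432,
                                       max_passes=10, l2_amount=1e-6)
```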

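Evaluations: To understand how well your model is performing, you’ll need to be familiar with terms like AUC (area under the ROC curve), macro-averaged F1-score, root mean square error (RMSE), cut-off (the score threshold that separates positive from negative predictions), accuracy, precision, and recall. Amazon ML reports AUC for binary models, macro-averaged F1 for multiclass models, and RMSE for regression models. These metrics assess the quality of your model and how accurately it is likely to predict outcomes.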
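These metrics can be computed by hand, which helps when reading an evaluation report. A plain-Python sketch with no AWS calls and hypothetical helper names; the AUC function uses the rank interpretation (the probability that a random positive outscores a random negative, with ties counted as half).

```python
import math


def binary_metrics(y_true, scores, cutoff=0.5):
    """Accuracy, precision, recall, and F1 for a binary model at a score cut-off."""
    predicted = [1 if score >= cutoff else 0 for score in scores]
    pairs = list(zip(y_true, predicted))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": (tp + tn) / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


def auc(y_true, scores):
    """Probability that a random positive scores above a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in positives for n in negatives)
    return wins / (len(positives) * len(negatives))


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (multiclass models)."""
    f1_scores = []
    for label in sorted(set(y_true) | set(y_pred)):
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        f1_scores.append(2 * tp / (2 * tp + fp + fn) if tp + fp + fn else 0.0)
    return sum(f1_scores) / len(f1_scores)


def rmse(actual, predicted):
    """Root mean square error for regression models."""
    return math.sqrt(sum((a - p) ** 2 for a, p in zip(actual, predicted)) / len(actual))
```

Moving the cut-off trades precision against recall, while AUC summarizes ranking quality across all cut-offs.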

Batch Predictions: Batch predictions generate predictions for a whole set of observations at once. They run asynchronously, and the results are written to an Amazon S3 location.
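A sketch of starting a batch job with the Amazon ML API, assuming a boto3 `machinelearning` client, an existing datasource for the observations to score, and a hypothetical batch ID:

```python
def start_batch_prediction(ml_client, model_id, datasource_id, output_s3_uri,
                           batch_id="bp-example"):
    """Queue an asynchronous batch prediction; results land under output_s3_uri.

    ml_client is assumed to be boto3.client("machinelearning"); datasource_id
    points at the observations to score; batch_id is a hypothetical name.
    """
    ml_client.create_batch_prediction(
        BatchPredictionId=batch_id,
        BatchPredictionName="Example batch prediction",
        MLModelId=model_id,
        BatchPredictionDataSourceId=datasource_id,
        OutputUri=output_s3_uri,
    )
    return batch_id


def batch_finished(ml_client, batch_id):
    """True once the job has stopped running (COMPLETED or FAILED)."""
    status = ml_client.get_batch_prediction(BatchPredictionId=batch_id)["Status"]
    return status in {"COMPLETED", "FAILED"}
```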

Real-Time Predictions: With real-time predictions, you can send a request and ask for an immediate response. The Real-Time Prediction API may be well suited for web, mobile, or desktop applications with low latency requirements.

Is machine learning engineering the right career for you?

Knowing machine learning and deep learning concepts is important—but not enough to get you hired. According to hiring managers, most job seekers lack the engineering skills to perform the job. This is why more than 50% of Springboard’s Machine Learning Career Track curriculum is focused on production engineering skills. In this course, you’ll design a machine learning/deep learning system, build a prototype, and deploy a running application that can be accessed via API or web service. No other bootcamp does this.

Our machine learning training will teach you linear and logistic regression, anomaly detection, and data cleaning and transformation. We’ll also teach you the most in-demand ML models and algorithms you’ll need to know to succeed. For each model, you will first learn how it works conceptually, then the applied mathematics necessary to implement it, and finally how to train and test it.

Find out if you’re eligible for Springboard’s Machine Learning Career Track.
